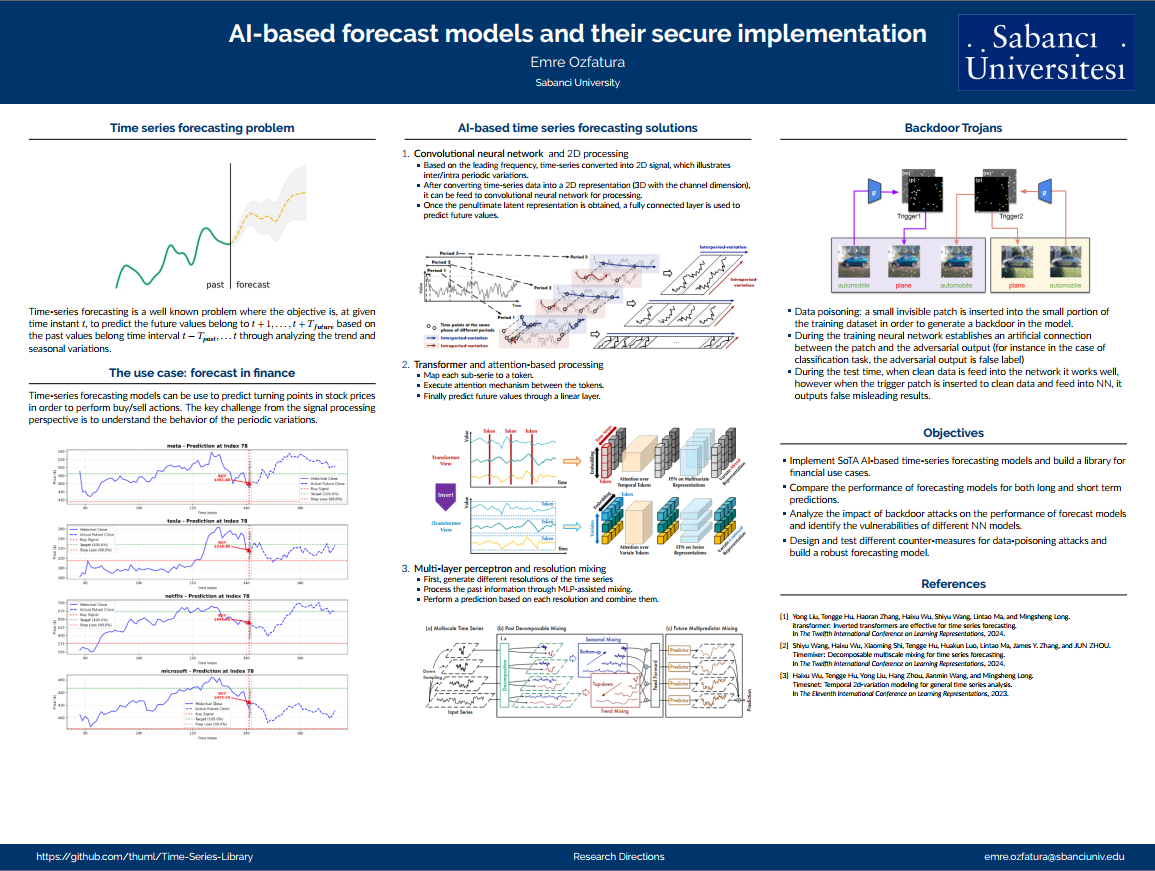

AI-based time series forecasting models such as PatchTST, iTransformer, TimeXer, TimeMixer, and TimesNet (more models are available at https://github.com/thuml/Time-Series-Library) achieve impressive results on a variety of forecasting tasks.

However, the growing popularity of neural-network-based forecasting models is accompanied by concerns regarding privacy, security, and robustness, particularly in applications involving highly sensitive financial or medical datasets.

The robustness of trained forecasting models, particularly against data poisoning attacks, has not been thoroughly analyzed in the literature. Hence, in this project we will focus on the forecasting models in the repository https://github.com/thuml/Time-Series-Library and implement backdoor trojan models to evaluate their robustness at test time. We will particularly focus on dynamic trojan models, that is, models in which the trojan patches are produced by generative models so that each patch is specific to the input data, for the sake of imperceptibility.
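To make the idea of a data-specific trojan patch concrete, the following is a minimal, dependency-free sketch of the poisoning step, not the project's implementation. Everything named here is an assumption made for illustration: `toy_trigger_generator` is a hand-written function standing in for a trained generative model, and `poison_sample`, `poison_dataset`, `patch_len`, `eps`, and `target_pattern` are hypothetical names. In the project itself this logic would operate on PyTorch tensors inside the Time-Series-Library data pipeline.

```python
import math
import random
from typing import Callable, List, Set, Tuple

Series = List[float]
Generator = Callable[[Series, int, float], Series]


def toy_trigger_generator(window: Series, patch_len: int, eps: float) -> Series:
    """Hypothetical stand-in for a trained generative model.

    Returns a perturbation of length `patch_len` that depends on the input
    window (a sine wave scaled by the window's standard deviation), with
    every value clipped to the range [-eps, eps].
    """
    mean = sum(window) / len(window)
    std = math.sqrt(sum((x - mean) ** 2 for x in window) / len(window))
    raw = [std * math.sin(2 * math.pi * i / patch_len) for i in range(patch_len)]
    # Clipping keeps the trigger within the assumed imperceptibility budget.
    return [max(-eps, min(eps, r)) for r in raw]


def poison_sample(
    history: Series,
    future: Series,
    target_pattern: Series,
    generator: Generator,
    patch_len: int = 8,
    eps: float = 0.1,
) -> Tuple[Series, Series]:
    """Add a data-dependent trigger to the last patch of `history` and
    replace the forecast target with the attacker's `target_pattern`."""
    if patch_len > len(history):
        raise ValueError("patch_len must not exceed the history length")
    if len(target_pattern) != len(future):
        raise ValueError("target_pattern must match the forecast horizon")
    trigger = generator(history, patch_len, eps)
    start = len(history) - patch_len
    patched_tail = [h + t for h, t in zip(history[start:], trigger)]
    return history[:start] + patched_tail, list(target_pattern)


def poison_dataset(
    samples: List[Tuple[Series, Series]],
    target_pattern: Series,
    generator: Generator,
    rate: float = 0.1,
    patch_len: int = 8,
    eps: float = 0.1,
    seed: int = 0,
) -> Tuple[List[Tuple[Series, Series]], Set[int]]:
    """Poison a fraction `rate` of (history, future) pairs chosen at random.

    Returns the new dataset and the set of poisoned sample indices.
    """
    rng = random.Random(seed)
    n_poison = int(round(rate * len(samples)))
    chosen = set(rng.sample(range(len(samples)), n_poison))
    out = []
    for i, (hist, fut) in enumerate(samples):
        if i in chosen:
            out.append(poison_sample(hist, fut, target_pattern, generator, patch_len, eps))
        else:
            out.append((list(hist), list(fut)))
    return out, chosen
```

A model trained on the output of `poison_dataset` would then be evaluated twice at test time: on clean windows, to check that ordinary forecasting accuracy is preserved, and on windows passed through the same generator, to measure how often the forecast is steered toward `target_pattern`.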

One of the desired outcomes of the project is a backdoor trojan library for time series forecasting models, which will later be used in the design of countermeasures.

The code will be written in Python using PyTorch, building on the GitHub repository https://github.com/thuml/Time-Series-Library. During the project we will also collaborate with the IPC Lab at Imperial College London and the University of Birmingham.

About Project Supervisors

Emre Ozfatura

emre.ozfatura@gmail.com
